After Universal Avionics solicited creative ideas from employees for applying artificial intelligence (AI) during its 2023 Grand Challenge, the company’s first AI-based products are coming to fruition.

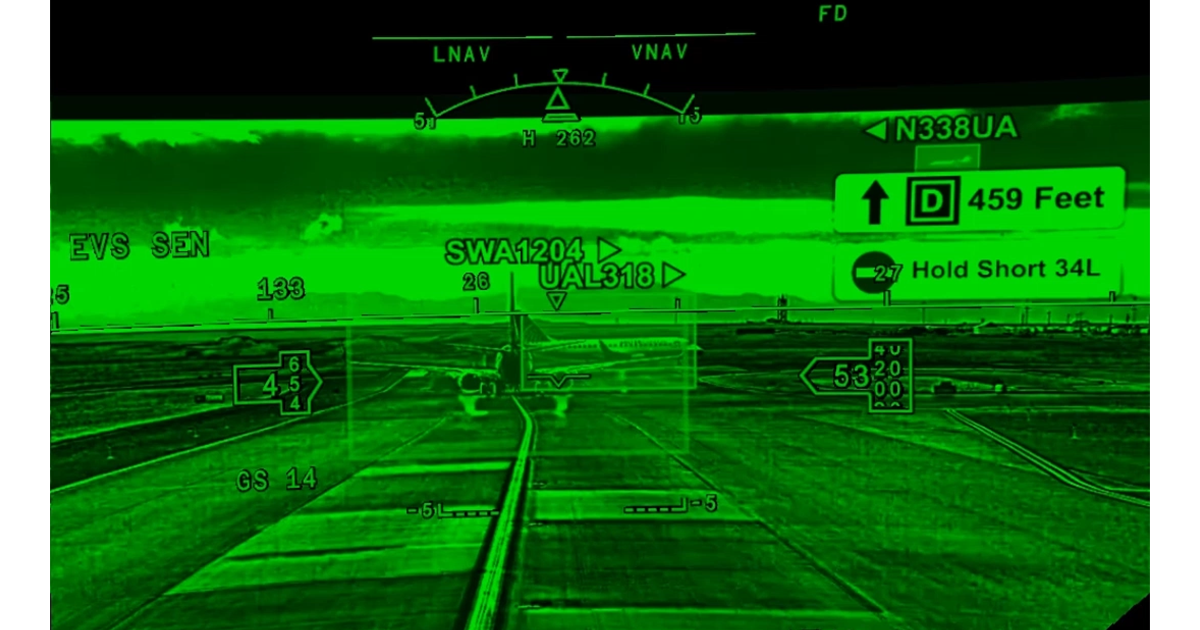

This week at NBAA-BACE 2024, Universal is demonstrating one of the concepts that came out of the Grand Challenge: an AI-driven system that combines external camera inputs, audio capture, and ADS-B In information to give the pilot a picture of the situation on the airport surface while taxiing. The AI system taps into Universal’s FAA-certified Aperture visual management system, which delivers video inputs and imagery to flight deck displays and head-up displays (HUDs), including Universal’s SkyLens head-wearable display (HWD).

“We're going to develop these functions, which are AI-based, and incorporate them into our different product lines,” said Universal CEO Dror Yahav. “There is room for improvement of functionality and all of our product line using this disruptive technology.”

In Aperture, AI is being applied to image content analysis. “We are training the system to identify objects in the EVS [enhanced vision system] image, and then, based on that, provide alerts to pilots,” he explained. “The more data we have, the better we will be able to offer new functionality and more accurate services.”

Data is captured from sensors such as Universal’s nose-mounted EVS-5000 multi-spectral camera, which feeds HUDs and HWDs, as well as external cameras already installed on the tail, belly, and elsewhere on many aircraft. The system also captures audio from the aircraft’s communications radios.

“We are recording [this information], and also it can be used for debriefing purposes and accident prevention. But the main reason we did that was to enable continuous learning, machine learning [by the AI system],” Yahav added.

The recorded data isn’t only useful for post-flight debriefing; more importantly, the system provides real-time assistance to pilots. The AI system can isolate and ingest com radio audio and determine which instructions are intended for its own aircraft.

For example, if a controller clears the aircraft for takeoff, the AI system recognizes the registration number the controller uses to address its pilot. Communications to other aircraft can safely be ignored (although presumably future applications could have the AI track what other aircraft are doing, for example, to help prevent a runway incursion or collision).
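The callsign filtering described above can be illustrated with a minimal sketch. It assumes an upstream speech-to-text stage has already turned each radio call into text with digits spoken as words, and it treats a call as "ours" only when the full registration is spoken as one uninterrupted run of ICAO spelling words. The names `PHONETIC`, `spelled_runs`, `is_for_own_aircraft`, and `filter_own_messages` are hypothetical; this is not Universal’s implementation, and it ignores abbreviated callsigns and calls that merely mention our registration.

```python
import re
from typing import List

# Hypothetical mapping of ICAO spelling words (plus common variants) to
# characters. Illustrative assumption only, not Universal's software.
PHONETIC = {
    "alfa": "A", "alpha": "A", "bravo": "B", "charlie": "C", "delta": "D",
    "echo": "E", "foxtrot": "F", "golf": "G", "hotel": "H", "india": "I",
    "juliett": "J", "juliet": "J", "kilo": "K", "lima": "L", "mike": "M",
    "november": "N", "oscar": "O", "papa": "P", "quebec": "Q", "romeo": "R",
    "sierra": "S", "tango": "T", "uniform": "U", "victor": "V",
    "whiskey": "W", "x-ray": "X", "xray": "X", "yankee": "Y", "zulu": "Z",
    "zero": "0", "one": "1", "two": "2", "three": "3", "tree": "3",
    "four": "4", "fower": "4", "five": "5", "fife": "5", "six": "6",
    "seven": "7", "eight": "8", "nine": "9", "niner": "9",
}


def spelled_runs(transcript: str) -> List[str]:
    """Decode each run of consecutive spelling words into one string.

    Assumes digits were transcribed as words ("two six"), not numerals.
    """
    runs: List[str] = []
    current: List[str] = []
    for word in re.findall(r"[a-z]+(?:-[a-z]+)?", transcript.lower()):
        if word in PHONETIC:
            current.append(PHONETIC[word])
        elif current:
            # Any non-spelling word ("cleared", "runway") ends the run.
            runs.append("".join(current))
            current = []
    if current:
        runs.append("".join(current))
    return runs


def is_for_own_aircraft(transcript: str, registration: str) -> bool:
    """True if the full registration is spoken as one run in the call."""
    return registration.upper() in spelled_runs(transcript)


def filter_own_messages(transcripts: List[str], registration: str) -> List[str]:
    """Keep only calls addressed to this aircraft; drop all others."""
    return [t for t in transcripts if is_for_own_aircraft(t, registration)]


if __name__ == "__main__":
    calls = [
        "November one two three alpha bravo, cleared for takeoff runway two six",
        "November four five six charlie delta, taxi to runway two six via delta",
    ]
    # Only the first call is addressed to N123AB.
    print(filter_own_messages(calls, "N123AB"))
```

Matching on a whole decoded run, rather than a substring, keeps a taxiway name such as "delta" or a runway number from being mistaken for part of the callsign.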

Once the AI captures the controller’s message, it displays the message as text in the upper right of the display (either head-down on an instrument panel display or head-up on a HUD or HWD). The message could be something simple, such as “Cleared for takeoff Runway 26,” or more complex: “Taxi to Runway 26 via Delta, hold short of Runway 26.” In the latter case, the text annotation is backed up by a graphic depiction showing a magenta line for the taxi route and a flashing white line at the runway threshold to indicate the “hold short” instruction. On a head-down display or even an iPad, these lines are shown in color; on a HUD or HWD, they are monochrome.

The view also shows other objects, such as ground vehicles and aircraft labeled with their call signs or registration numbers, to help improve the pilot’s situational awareness. Once the aircraft is cleared for takeoff, the hold-short line at the threshold disappears.
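The way a taxi clearance becomes a route line and a hold-short bar, and how a takeoff clearance removes that bar, can be sketched as a small state update. This is a minimal illustration under stated assumptions: it recognizes only the exact phrasings quoted in the article, and the `SurfaceGuidance` type and `apply_clearance` function are hypothetical names, not Universal’s software. Drawing the lines on a display is out of scope here.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Runway designator such as "26" or "26L" (captured as a regex group).
RUNWAY = r"(\d{1,2}[LRC]?)"


@dataclass
class SurfaceGuidance:
    """Hypothetical display state derived from ATC clearances."""
    destination: Optional[str] = None                    # runway the route ends at
    route: List[str] = field(default_factory=list)       # taxiways for the magenta line
    hold_short: List[str] = field(default_factory=list)  # runways with a hold-short bar
    cleared_takeoff: Optional[str] = None                # runway cleared for takeoff


def apply_clearance(state: SurfaceGuidance, text: str) -> SurfaceGuidance:
    """Update the display state from one transcribed clearance.

    Only the phrasings quoted in the article are recognized; other clauses
    are ignored (an assumption, not full ATC phraseology coverage).
    """
    for clause in (c.strip(" .") for c in text.split(",")):
        if m := re.fullmatch(rf"taxi to runway {RUNWAY}(?: via (.+))?", clause, re.I):
            state.destination = m.group(1).upper()
            via = m.group(2) or ""
            # Ordered taxiway list, e.g. "Alpha and Bravo" -> ["Alpha", "Bravo"].
            state.route = [w.capitalize() for w in via.split()
                           if w.lower() not in {"and", "then"}]
        elif m := re.fullmatch(rf"hold short of runway {RUNWAY}", clause, re.I):
            runway = m.group(1).upper()
            if runway not in state.hold_short:
                state.hold_short.append(runway)
        elif m := re.fullmatch(rf"cleared for takeoff runway {RUNWAY}", clause, re.I):
            runway = m.group(1).upper()
            state.cleared_takeoff = runway
            # Takeoff clearance removes the hold-short bar at that runway.
            state.hold_short = [r for r in state.hold_short if r != runway]
    return state


if __name__ == "__main__":
    s = apply_clearance(SurfaceGuidance(),
                        "Taxi to Runway 26 via Delta, hold short of Runway 26.")
    print(s.route, s.hold_short)   # route line and hold-short bar to draw
    s = apply_clearance(s, "Cleared for takeoff Runway 26")
    print(s.hold_short)            # bar removed after takeoff clearance
```

Keeping the display state separate from the parsing makes the behavior described in the article explicit: each new clearance for the aircraft only adds or removes graphical elements, so the picture stays in step with the latest instruction.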

Yahav explained that the system won’t display communications for other aircraft because that would just clutter up the display. “It's too much information. When you look at a full HUD image, it’s quite busy. We don't want to give information which is not particularly relevant.”

However, thanks to ADS-B In, the pilot can still see other traffic on the head-down display, HUD, or HWD, even when inclement weather obscures it visually, and can easily match that traffic’s displayed call sign with the one heard in incoming radio calls.

Another technology Universal is highlighting this week at BACE is voice activation built into a software-based flight management system (FMS). The plan is to use voice activation not for simple tasks (such as a direct-to command) but for complex situations.

One example is reprogramming an instrument approach or arrival procedure at the last minute, which takes a lot of button pushing on a traditional FMS control-display unit. The idea is that the software-based FMS will hear the radio call from a controller and automatically modify the flight plan, then simply ask the pilot to approve the change.

Meanwhile, Universal is tackling the problem of LED lights, which are increasingly being installed in approach light systems because incandescent lamps are no longer available or are too costly. Many EVS-capable aircraft can’t show LED lights because the system’s camera lacks a sensor that can pick them up. Incandescent lamps, by contrast, are easy to see because they give off lots of heat that thermal sensors readily detect.

Universal’s EVS-5000 camera, however, is designed to work with LEDs, and flight tests have shown that it can detect them in low-visibility conditions. This will allow operators to obtain operational credit for low-visibility landings where approach lights use LED lighting.

The EVS-5000 is installed on Textron Aviation Citation Longitudes equipped with Garmin’s GHD 2100 HUD, Dassault Falcons with Universal parent company Elbit’s HUD, ATR 42s and 72s (SkyLens HWD), Boeing 737NGs, and Embraer C-390s, among others. The ATRs have been approved for a 50% visual advantage (increase in forward visual range) that allows landings in lower visibility, and Universal expects further such approvals for EVS-5000-equipped aircraft.

Universal is also displaying its InSight Display System integrated avionics suite at BACE—not only at its exhibit but also at booths of Universal Avionics dealers Chicago Jet, StandardAero, Trimec, and Southeast Aerospace.

Installations of InSight are ramping up, Yahav said. “During the last two years, we’ve completed a big set of STCs.” These include the Falcon 20, 50, 900, and 2000EX; Citation VII; Hawker 800/A/XP; and Gulfstream III.

These aircraft models still “can fly for many years, the engines are good and being supported. But the weak link is the avionics, which become obsolete,” he said. Additionally, operators need advanced functionality that InSight adds, such as LPV approaches, controller-pilot datalink communications, FANS 1/A, ATN B-1, and ACARS.

Customers also want to connect their iPads to the avionics for database updates and aircraft data downloads; InSight supports this and also offers a flight operations quality assurance service.

“There are a couple dozen InSight systems installed,” Yahav said, “but many of the STCs are new and we expect to see a big funnel of customers doing upgrades.”