Imagine you’re a new military pilot about to refuel in the air, the first time on your own without the instructor. You’re flying in formation with a KC-46 tanker and in preparation for refueling, you slow down slightly and drop back behind the tanker, which still looms large in your view. The refueling drogue hangs from the tail of the tanker, beckoning you to move forward and capture it in the receptacle on the nose of your jet. You’re 100 percent focused on the task, making tiny movements with the controls, pushing and pulling on the throttle to try to mate the drogue with your airplane so you can take on some much-needed fuel. Suddenly, you realize that you got behind your airplane; something, maybe a bit of turbulence, caused you to shift slightly, and perhaps you over-controlled, and there’s no instructor on board to take over, and bam, midair collision with the drogue, uh-oh…

Or not.

It turns out that the KC-46 is a “virtual asset,” and your airplane is just fine. You’re flying formation with a 3D airplane that isn’t real but is dynamically interactive; in other words, it flies and acts like a real airplane. And while flying your airplane, you can interact in real time with this virtual asset, allowing you to practice air-to-air refueling, dogfighting against an adversary, formation flying, bombing runs on fake targets, and other important training.

A Santa Monica, California-based technology company called Red 6 has developed this capability, which essentially means creating augmented reality (AR) 3D virtual assets that pilots can “see” while wearing a specially equipped helmet. What is significant about what Red 6 has accomplished is that the virtual assets are not just fixed in place but act as though they are physically moving in the real world. The result, in other words, is a virtual asset that flies like a real aircraft and that pilots flying real aircraft can interact with.

Red 6 was formed after a chance meeting in 2015 between Dan Robinson, Nick Bicanic, and Glenn Snyder. Robinson, a UK Royal Air Force Tornado pilot, is a graduate of the UK Fighter Weapons School (Top Gun equivalent), and the first non-American to fly the F-22 Raptor. He, Chris Randall, and Berkut designer Dave Ronneberg were building a Berkut composite, canard-configured experimental amateur-built airplane at Santa Monica airport. Bicanic (also a helicopter pilot) and Glenn Snyder were introduced to Robinson and a serendipitous conversation ensued. Snyder had been working on augmented and virtual reality projects and he showed Robinson one of his more interesting developments: a unique virtual reality (VR) race-car gaming environment.

This turned out to be a setup where two separate drivers in two real cars raced against each other, but one was in the U.S. and the other in the UK. In each car was a driver wearing VR glasses, which obscure the outside view, along with a safety rider. Inside the VR glasses, the drivers could see they were on a virtual racecourse, but they could also see each other’s car, virtually, so they could race against each other. In reality, they were each driving around spacious parking lots, separated by thousands of miles and connected via the internet. It was a wild idea that worked perfectly, and it planted a seed that led to the launch of Red 6.

When he saw the VR race car setup, Robinson asked Bicanic and Snyder whether this would be possible to replicate with airplanes. The answer was, “It’s technically difficult and a very significant [technology application], but we think it's possible.” But Robinson realized that “no one is going to allow fighter pilots to fly around in an entirely virtual world” while wearing vision-obscuring VR glasses, so then he asked if it would be possible to use AR to place virtual elements in the real world.

AR and VR

AR differs from VR in that the user can still see the outside world while wearing some sort of glasses or visor that projects the AR images onto the wearer’s eyes. With VR, the user can see only what is projected onto the screens in the glasses, which don’t allow any view outside.

The quick answer to Robinson’s question was “no,” because AR doesn’t work outdoors in dynamic environments. But this didn’t stop the line of thought that was quickly developing, and the three of them figured that if this outdoors AR problem could be solved, they could be at the forefront of developing a system that would open up new vistas in the training market, potentially saving military forces and other entities billions of dollars in training costs. Red 6 was born.

The essential problem for a military air arm is that it has to train pilots to fight against adversaries. And the adversaries have grown increasingly sophisticated, leveling the playing field among the largest and most well-funded countries, meaning that even more training is necessary. The typical solution is to hold exercises in which the blue air good guys dogfight against red air bad guys. The cost of running these training exercises is enormous, especially if it involves flying fighters from the UK, for example, for sessions to be held in Nevada. And, while the training itself is useful, the red air pilots are not learning how to be the good guys, so some of their time is spent inefficiently. Another problem is that it is difficult to simulate accurately an adversary’s fighter by trying to replicate its performance with a completely different airplane. Finally, the military is facing a pilot shortage, and this has a huge effect on training capability.

It turns out that the U.S. Air Force Research Laboratory's 711th Human Performance Wing has been working on this problem and came up with the Secure Live Virtual Constructive (LVC) Advanced Training Environment (SLATE). This allows pilots in real airplanes to shoot at computer-generated targets that are beyond visual range, along with simulators on the ground with computer-generated targets for adversary training. The problem with LVC, according to Robinson, is that it doesn’t allow the pilots flying real fighters to see and interact with virtual and constructive assets that are within visual range.

So Robinson contacted the Air Force, starting with its AFWERX research and development unit, which referred him to the Research Laboratory. In February, Red 6 hosted 12 visitors from the lab, the Air Force’s Test Pilot School, and Air Education and Training Command to test-fly an AR training sortie created by Red 6 using ground-based equipment, showing how it might work in the air. The test was conducted with the subject sitting inside Robinson’s Berkut and involved a simulated AR sortie with aerial refueling and two-against-two fights against Russian adversaries.

The Air Force awarded Red 6 a $75,000 Small Business Innovation Research grant, and the Red 6 team then went to venture capital companies and raised a seed round of more than $2.5 million, led by Moonshots Capital. “That gave us our runway,” Robinson said, and this allowed the company to begin developing its Airborne Tactical Augmented Reality System (A-TARS).

The Air Force researchers really wanted to see the Red 6 A-TARS work in live flight and challenged Red 6 to, as Robinson said, “prove you can fix an object in space and lock it there and we can maneuver in relation to it.”

The Red 6 team started with a virtual framed cube, without sides, fixed in space. While wearing Red 6’s modified Gentex HGU-55 helmet, the pilot can see the virtual cube and fly around, over, and under it or through the sides. The helmet, which is equipped with Coupled Fusion Tracking (CFT), is fitted with a quick-release AR module and an external gold polycoated visor. A vital function is tracking the pilot’s head, and a hybrid-optical tracking system does this, separating eye tracking and head tracking functions to ensure sufficient fidelity. An avionics integration system (AIS) manages the system and ensures that what the pilot is seeing matches the desired virtual asset. Then every 15 to 20 milliseconds, the CFT draws images to be projected onto the left and right eyes, slightly different to account for stereo perception, thus giving the pilot an image that he or she can see superimposed on the view of the real world.
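To make the idea of a world-fixed object and a per-eye image concrete, here is a minimal Python sketch. It is not Red 6's method. The function names, the flat east/north/up coordinate system, the yaw-only head rotation, and the 64 mm eye separation are all assumptions chosen for illustration. It shows how a point locked in space shifts in the pilot's view as the head moves or turns, and why the two eyes get slightly different images.

```python
import math

IPD_M = 0.064  # assumed eye separation in meters (illustrative value only)


def frame_rate_hz(period_ms):
    """Convert a redraw period in milliseconds to a refresh rate in hertz."""
    return 1000.0 / period_ms


def world_to_head(point, head_pos, yaw_deg):
    """Express a world-fixed point in head axes (right, forward, up).

    World axes are x east, y north, z up. yaw_deg is the head's heading,
    measured clockwise from north. Pitch and roll are ignored for brevity.
    """
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]
    yaw = math.radians(yaw_deg)
    right = dx * math.cos(yaw) - dy * math.sin(yaw)
    forward = dx * math.sin(yaw) + dy * math.cos(yaw)
    return right, forward, dz


def stereo_azimuths_deg(point, head_pos, yaw_deg, ipd=IPD_M):
    """Horizontal angle of the point as seen from the left and right eye."""
    right, forward, _ = world_to_head(point, head_pos, yaw_deg)
    # The left eye sits ipd/2 to the left of head center, so the point
    # appears shifted to the right for it, and vice versa for the right eye.
    left_eye = math.degrees(math.atan2(right + ipd / 2, forward))
    right_eye = math.degrees(math.atan2(right - ipd / 2, forward))
    return left_eye, right_eye


# Hypothetical cube corner 100 m due north of the pilot's head.
cube_corner = (0.0, 100.0, 0.0)
print(frame_rate_hz(20), frame_rate_hz(15))
print(stereo_azimuths_deg(cube_corner, (0.0, 0.0, 0.0), 0.0))
print(world_to_head(cube_corner, (0.0, 0.0, 0.0), 90.0))
```

A redraw every 15 to 20 milliseconds corresponds to roughly 50 to 67 frames per second. Because the point's world coordinates never change, only the head position and heading fed into each redraw determine where it appears, which is what makes the object look locked in space.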

Demo Flight

Robinson decided to put his Berkut to work as the flight-test platform for A-TARS, because it is suited to the requirements for demonstrating fighter-like maneuverability. The single-engine, piston-powered Berkut is aerobatic and can withstand high g loads, so it is ideal for chasing virtual fighters through the sky, although obviously not at jet fighter-like speeds.

The Berkut is set up so both the front- and rear-seat occupants can wear the A-TARS helmet. Robinson does most of the demo flights, flying from the front seat. The rear seat’s flight controls are removed.

For A-TARS to work, the pilot must be able to see both the real world through the AR goggles and visor and the virtual assets projected onto his or her eyes. Another challenge for the Red 6 developers is making sure both are sufficiently bright to make flying safe and allow a good view of the assets.

The pilot’s peripheral view is also important. While the pilot’s field of view through the A-TARS helmet is essentially unlimited (the pilot can look in any direction by turning his or her head), the peripheral view is limited. Presently, A-TARS allows more than 105 degrees of peripheral view.

The peripheral view is critical in fighter warfare because a pilot’s eyes normally can spot a moving object at the more-than-180-degree limits of ordinary eyesight. Robinson said, “We will achieve 150 degrees in the next 18 to 24 months. It’s not 180 degrees, but it’s close. And crucially, brightness would be off the charts and would not be restricted because we’re not using a traditional reflective surface [for the visor].”

AIN flew the demo on December 6, taking off from Camarillo Airport in southern California. The weather was gray and there were rain showers nearby, so it wasn’t optimal lighting for A-TARS. The helmet was comfortable and didn’t feel too heavy, but the view through the visor was somewhat dark, like wearing sunglasses.

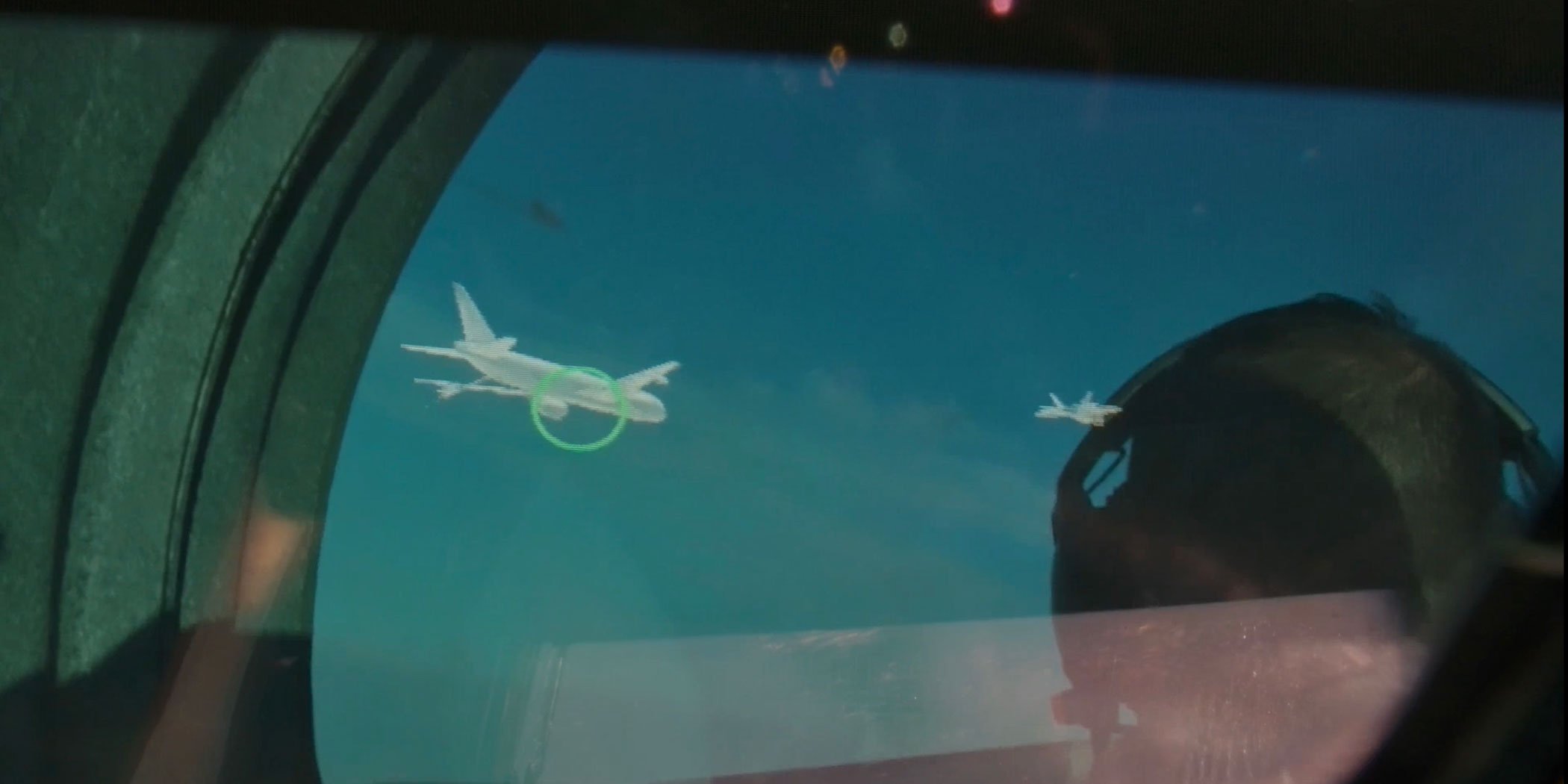

After takeoff, Robinson climbed to between 5,000 and 6,000 feet north of the airport. He first showed me the virtual 3D cube, then climbed and zoomed around the asset so I could see how that looked. Next, he pulled up alongside the KC-46 tanker, which was flying in formation with an F-22 Raptor. The images are monochrome, depicted in sort of a filled-in wireframe style and light-colored so they contrast well with the outside world. As we flew near the KC-46 and F-22, they acted as I would expect real airplanes to fly, growing larger as we flew closer, sliding away as Robinson banked, and so forth. After sliding over to fly next to the F-22, Robinson slowed the Berkut and slid us behind the tanker’s boom, as if preparing to mate with the drogue. The physics of what we were doing seemed completely natural.

Finally—and I was the first-ever to experience this—Robinson pulled up a Russian PAK FA future tactical fighter and pulled us into a 3-g bank as he chased it around the sky. We had to cut this air-combat portion short as rain was moving toward the airport. But it demonstrated how A-TARS can work for adversary combat training.

The behavior of the adversary can be modeled using pre-programmed scripted artificial intelligence, which is what I saw during the demo. Red 6 is working with a company that has developed artificial intelligence dogfighting algorithms as part of the Defense Advanced Research Projects Agency’s (DARPA's) Strategic Technology Office Air Combat Evolution program. But Red 6 could also incorporate simulators to provide the “virtual” element of the Air Force’s LVC, according to Bicanic, which would allow a pilot on the ground to “fly” as the virtual adversary asset.

A huge advantage of A-TARS is that because the virtual assets are computer-generated, it is possible to create any kind of flying machine. “If we have some intelligence [about the asset] and can code, you can train against anything you want,” said Robinson. “We could do a [Star Wars] X-wing or Millennium Falcon. It’s just code.”

What’s Next for Red 6?

Much of the work to make A-TARS commercially useful and capable of fulfilling the military and other missions remains to be done. The Red 6 team believes that A-TARS holds huge promise for improving pilot training at far lower cost than using real assets.

To continue developing A-TARS, Red 6 plans to raise more money, including a Series A fund in the first quarter of 2020. “What is the problem we are solving?” Robinson asked. “We’re putting virtual airplanes in the real world, not just for pilots, but to connect everyone together in an augmented battlespace. A-TARS offers an incredible opportunity to synthetically train across multiple domains.”

Ultimately, what Red 6 is really going after, according to Robinson, is eliminating the portable computers that we all carry, our smartphones. “The trillion-dollar market is spatial computing for consumers,” he said, “killing phones and manipulating things in the space around us. We have a pathway, a technology roadmap for display technology for the consumer market. How do we get there? The problem we’re solving [first] is allowing AR for the first time to be mobile and work outdoors. We have an incredibly compelling use case.”